AI Content Moderation for Enterprises & Organizations

Copyleaks Text Moderation is an AI-driven content moderation platform that helps businesses and institutions keep user-generated content safe and compliant with their policies. It moderates text in real time through an API integration, automatically flagging and filtering harmful or policy-violating content across your platform.

Built for:

- Content reviewers and trust and safety teams

- Publishers and media platforms

- Social media and user-generated content (UGC) app moderators

- Review platforms and community feedback tools

- Discussion threads, forums, and online communities

- Teams curating or moderating training data for large language models (LLMs)

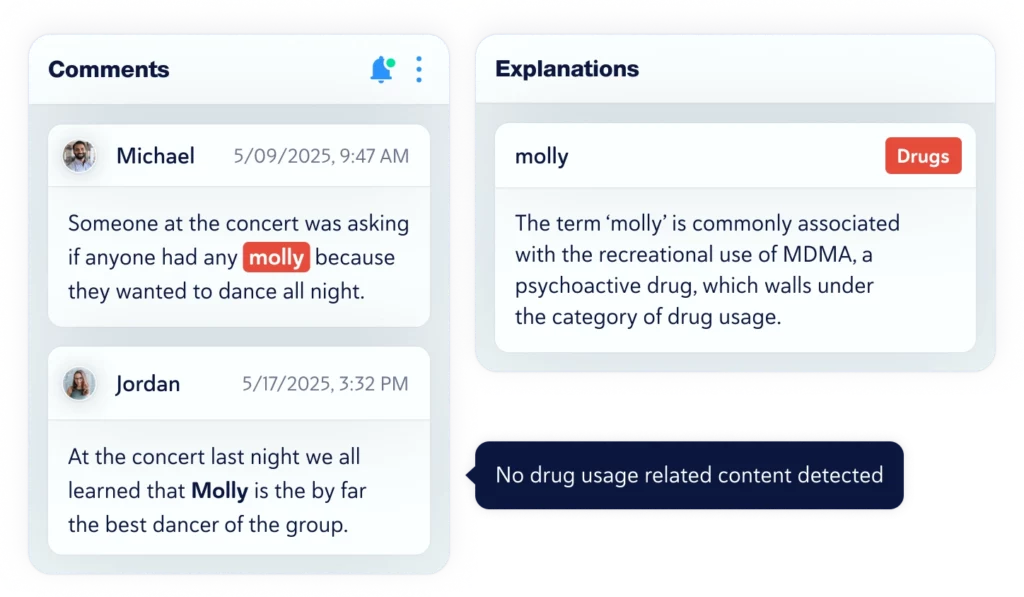

Contextual Awareness for More Accurate Harmful Content Detection

Boost Productivity, Not Your Workload

With AI-enabled contextual awareness, our content moderation software reduces false positives while still capturing harmful content that keyword-based tools often miss.

Customize Your Concerns and Workflow

Tailor the system to your specific content policies. You choose which categories of content to monitor and enforce, such as violence, harassment, and hate speech, and the moderation API returns real-time violation results for the categories you select.

Explanations for Flagged Content

Text Moderation will go beyond category labels and provide explanations for why content was flagged. Instead of only seeing “Violent” or “Hate Speech,” you’ll understand the exact context behind each flag, empowering moderators to make faster, more informed decisions.

Custom Labeling for Text Moderation

Create and apply your own custom labels to detect exactly what matters to you. Whether you need to flag real estate listings mentioning “balcony” or spot industry-specific terms across thousands of documents, you’ll be able to sort, filter, and aggregate text based on your unique criteria. It’s moderation and search, tailored entirely to your business needs.
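To illustrate what sorting, filtering, and aggregating by custom labels can look like on the client side, here is a minimal sketch. It assumes the labels have already been applied to each document (for example, by the labeling service) and that results are kept in a simple local record; the `LabeledText` class, the `with_label` and `label_counts` helpers, and the sample listings are all hypothetical and do not reflect the Copyleaks API.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LabeledText:
    """Hypothetical record: one document plus the custom labels applied to it."""
    doc_id: str
    text: str
    labels: set[str] = field(default_factory=set)


def with_label(docs: list[LabeledText], label: str) -> list[LabeledText]:
    """Filter: keep only documents that carry the given custom label."""
    return [d for d in docs if label in d.labels]


def label_counts(docs: list[LabeledText]) -> Counter:
    """Aggregate: count how many documents carry each label."""
    counts: Counter = Counter()
    for d in docs:
        counts.update(d.labels)
    return counts


# Sample real estate listings; the labels are assumed, not produced here.
listings = [
    LabeledText("a1", "Sunny two-bedroom with a large balcony.", {"balcony", "two-bedroom"}),
    LabeledText("a2", "Ground-floor studio near the station.", {"studio"}),
    LabeledText("a3", "Penthouse with balcony and roof access.", {"balcony", "penthouse"}),
]

# Sort the filtered results by document ID for a stable order.
balcony_ids = sorted(d.doc_id for d in with_label(listings, "balcony"))
print(balcony_ids)                            # ['a1', 'a3']
print(label_counts(listings).most_common(1))  # [('balcony', 2)]
```

Keeping labels as a set per document makes both filtering (membership test) and aggregation (counting) one-liners, regardless of how many custom labels you define.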

Understand the Language, Not Just the Words.

Ready to see how context-aware content moderation can transform your business? Copyleaks Text Moderation automatically flags harmful or policy-violating content in real time through a simple API integration. Get a demo to see how easy it is to build a safer, more compliant community.

Seamless Text Moderation API Integration

Our Text Moderation API integrates easily into existing platforms and workflows. Developers can plug the API into websites, forums, or learning management systems (LMS) to screen content automatically in real time. The API supports more than 10 content categories, assigns a confidence score to each detection, and returns structured results.
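As a rough illustration of consuming structured results with confidence scores, the sketch below parses a response body and keeps only the categories you monitor that meet a confidence threshold. The JSON shape (`categories`, `name`, `confidence`), the 0 to 1 score scale, the category identifiers, and the 0.8 default threshold are all hypothetical assumptions, not the documented Copyleaks response format.

```python
import json

# Hypothetical response body; real field names and score scale may differ.
SAMPLE_RESPONSE = """
{
  "categories": [
    {"name": "harassment", "confidence": 0.91},
    {"name": "profanity", "confidence": 0.42},
    {"name": "violent", "confidence": 0.87}
  ]
}
"""


def flagged_categories(
    response_body: str, monitored: set[str], threshold: float = 0.8
) -> list[tuple[str, float]]:
    """Return (category, confidence) pairs for monitored categories at or
    above the threshold, highest confidence first."""
    data = json.loads(response_body)
    hits = [
        (c["name"], c["confidence"])
        for c in data.get("categories", [])
        if c["name"] in monitored and c["confidence"] >= threshold
    ]
    hits.sort(key=lambda pair: pair[1], reverse=True)
    return hits


if __name__ == "__main__":
    # Only the categories your policy cares about are considered.
    monitored = {"harassment", "violent", "hate-speech"}
    print(flagged_categories(SAMPLE_RESPONSE, monitored))
    # [('harassment', 0.91), ('violent', 0.87)]
```

Separating category selection from the confidence threshold lets each team tune how strict enforcement is per policy without changing which categories it tracks.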


Designed to Enhance AI and Plagiarism Detection

Already using AI Detector or Plagiarism Checker? Our Text Moderation API helps ensure that AI-generated content meets the same content safety policies as original text.

Together, these tools offer a comprehensive content moderation solution for protecting your organization’s integrity and ensuring platform safety.

Request a Demo

See text moderation in action. Our sales team is ready to answer your questions.

Frequently Asked Questions

How does Copyleaks Text Moderation reduce false positives?

Instead of relying on keyword lists, Copyleaks uses contextual AI to understand how language is being used. This lets it distinguish harmful uses of a word or phrase from harmless ones, reducing unnecessary flags and manual reviews. For example, it recognizes the difference between a violent threat and a casual phrase like “this game is killer.”
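To show why keyword lists produce false positives, here is a minimal sketch of a naive keyword filter. It is the keyword-based approach described above, not Copyleaks' contextual model, and the keyword list and `keyword_flag` helper are hypothetical examples chosen for illustration.

```python
import re

# Hypothetical keyword list, for illustration only.
VIOLENT_KEYWORDS = {"kill", "killer", "shoot"}


def keyword_flag(text: str, keywords: set[str] = VIOLENT_KEYWORDS) -> bool:
    """Flag text if any whole word matches a keyword, ignoring all context."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(w in keywords for w in words)


examples = [
    "this game is killer",        # casual praise
    "I am going to kill you",     # threat
    "meet me at the park later",  # neutral
]
for text in examples:
    print(keyword_flag(text), text)
# True this game is killer
# True I am going to kill you
# False meet me at the park later
```

The filter treats the casual phrase and the threat identically because it only sees matching words; telling them apart requires a model that considers how the words are used.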

What types of content does Copyleaks Text Moderation flag?

Text Moderation can flag a wide range of harmful content, including:

- Adult

- Toxic

- Violent

- Profanity

- Self-harm

- Harassment

- Hate speech

- Drugs

- Firearms

- Cybersecurity

You can customize which categories you monitor to align with specific policies and risk levels.

Will I see explanations for flagged content?

Later this year, we will roll out full explanations: each flagged content item will include in-text highlights and a clear explanation of why it was flagged as harmful, toxic, or against platform policies. This will help content moderators act quickly and consistently, and will provide valuable context for training review teams or refining your organization’s content policies.